In property underwriting, insurance carriers and real estate firms rely on a powerful tool: data. At the heart of their decision-making lies detailed information about a property’s history. This data-driven approach is pivotal for commercial underwriting, allowing these firms to make well-informed choices about coverage and risk.

This article explores why and how property permits and property condition are reshaping insurance underwriting:

Property underwriting is the process of assessing a property’s risk for insurance purposes, and that assessment guides carriers in setting appropriate premiums. Property history is the basis for it: whether a property is well maintained or has seen numerous modifications significantly affects insurance decisions. Property permits offer insight into a property’s adherence to local regulations and safety standards, so insurers scrutinize them to assess potential liabilities and non-compliance issues. When evaluating a property, carriers consider several permit-related components beyond just the roof.

Significant structural changes, such as additions or extensions, are evaluated for their impact on a property’s integrity and safety. The condition and compliance of plumbing and electrical systems are assessed, as issues in these areas can lead to hazards like fires or water damage. Compliance with local building codes and safety regulations is crucial. Non-compliance can raise risks and affect insurance coverage and premiums.

The roof is a crucial focus. Insurance carriers consider the roof’s age and condition when assessing risk. A newer roof, as indicated by a recent permit, suggests lower risk, while an older permit may indicate a need for repairs or replacement, impacting insurance terms and premiums.
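As a rough illustration of how roof age could be derived from permit history, the sketch below takes the most recent roofing permit and measures the time elapsed since it was issued. The record layout (`type`, `issued`) and the function name `roof_age_years` are assumptions made for this example, not a carrier's actual model.

```python
from datetime import date

def roof_age_years(permits, as_of):
    """Years since the most recent roofing permit, or None if none exists.

    The "type" and "issued" keys are a hypothetical record layout.
    """
    roof_dates = [p["issued"] for p in permits if p["type"] == "roof"]
    if not roof_dates:
        return None  # no roofing permit on file, so the age is unknown
    latest = max(roof_dates)
    return (as_of - latest).days / 365.25

# Hypothetical permit history for a single property.
permits = [
    {"type": "roof", "issued": date(2005, 6, 1)},
    {"type": "electrical", "issued": date(2019, 3, 15)},
    {"type": "roof", "issued": date(2021, 6, 1)},
]
age = roof_age_years(permits, as_of=date(2024, 6, 1))
print(f"Estimated roof age: {age:.1f} years")
```

A missing roofing permit is returned as `None` rather than treated as a new roof, since the absence of a permit says nothing about the roof's actual condition.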

Data Engineering for the Insurance Domain

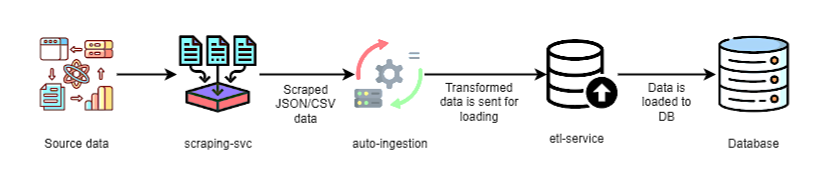

Data engineering is essential for handling and improving building permit data. It centers on ETL, which stands for extract, transform, and load. This process takes a large and varied set of data and makes it clean, standardized, and ready for analysis. Data engineering safeguards data quality and consolidates information from different places, such as government databases and local authorities, into a single location such as cloud storage.
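To make the three ETL stages concrete, here is a minimal sketch that standardizes permit records from two sources with different layouts. The source names (`county_a`, `city_b`), field names, and sample rows are all invented for illustration; a real pipeline would extract from live systems and load into a database or cloud storage rather than a Python list.

```python
from datetime import datetime

# Hypothetical raw records from two sources with different layouts.
RAW = {
    "county_a": [{"addr": " 12 main st ", "issue_dt": "06/01/2021", "permit_type": "ROOF"}],
    "city_b": [{"address": "40 Oak Ave", "issued": "2019-03-15", "kind": "Electrical "}],
}

def extract(source):
    """Extract: return the raw rows for one source (in-memory stand-in)."""
    return RAW[source]

def transform(raw, source):
    """Transform: map one raw row onto a common schema."""
    if source == "county_a":
        address, kind = raw["addr"], raw["permit_type"]
        issued = datetime.strptime(raw["issue_dt"], "%m/%d/%Y").date()
    elif source == "city_b":
        address, kind = raw["address"], raw["kind"]
        issued = datetime.strptime(raw["issued"], "%Y-%m-%d").date()
    else:
        raise ValueError(f"unknown source: {source}")
    return {
        "address": " ".join(address.upper().split()),  # collapse whitespace
        "issued": issued.isoformat(),                  # ISO 8601 date string
        "permit_type": kind.strip().lower(),
        "source": source,
    }

def load(records, store):
    """Load: append cleaned rows to the target store (a list here)."""
    store.extend(records)

warehouse = []
for source in RAW:
    load([transform(row, source) for row in extract(source)], warehouse)
```

After the loop, every row in `warehouse` shares the same keys and date format regardless of where it came from, which is what makes downstream analysis straightforward.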

Data engineering is crucial for building permit data because it helps insurance companies, real estate firms, and construction contractors do their jobs better. It allows the data to be analyzed quickly and correctly. It also provides a way to manage large volumes of data from all 50 states in the U.S. It keeps the data safe and makes it easy to use when making important decisions.

The pipeline streamlines collecting, processing, and managing building permit data for the US Insurance industry. It emphasizes automation, data quality, and efficient data retrieval to support insurance companies in making informed decisions related to property assessments and risk management.

The main features of this data engineering pipeline are:

Efficient Data Scraping

The pipeline runs scraping tasks concurrently, without continuous manual oversight. Because multiple scraping tasks run at the same time, efficiency and speed improve. The pipeline scrapes permit data from different counties and cities across multiple states and ingests it into the database. Scraping is optimized to complete within 4 minutes when the data is available at the source. For example, if a user requests permit data for a specific address, the pipeline checks whether the data is available at the source (e.g., a municipal website) and, if so, scrapes the relevant information within 4 minutes.
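One common way to run scraping tasks concurrently in Python is a thread pool. The sketch below is an illustration under assumptions, not the pipeline's actual code: `scrape_source` is a stand-in that returns fake rows instead of making HTTP requests, the source names are invented, and the 240-second budget simply mirrors the 4-minute target mentioned above.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed

def scrape_source(name):
    """Stand-in for a real scraper: returns fake permit rows for one source."""
    if name == "offline-county":
        raise ConnectionError(f"{name} is unavailable")
    return [{"source": name, "permit_id": f"{name}-001"}]

def scrape_all(sources, max_workers=4, timeout_s=240):
    """Scrape every source concurrently, collecting rows and failures.

    as_completed raises TimeoutError if some sources are still pending
    after timeout_s seconds (240 s is an assumed budget).
    """
    results, failures = [], {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(scrape_source, s): s for s in sources}
        for future in as_completed(futures, timeout=timeout_s):
            source = futures[future]
            try:
                results.extend(future.result())
            except Exception as exc:
                # One failing source should not stop the others.
                failures[source] = str(exc)
    return results, failures

rows, errors = scrape_all(["travis-county", "offline-county", "city-of-denver"])
```

Failures are recorded per source so that an unavailable municipal site can be retried later without discarding the rows already collected from the others.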

Geocoding with High Accuracy

The pipeline accurately associates geographic coordinates with property addresses. For example, if a permit data source provides address information, the pipeline can accurately determine the latitude and longitude coordinates for each property. This enables precise mapping and spatial analysis.
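The sketch below shows one way the address-to-coordinates step might be wrapped, assuming the actual lookup is delegated to some geocoding service. The service is represented by a `lookup` callable passed in by the caller; `fake_lookup` and its single sample address are hypothetical stand-ins. The wrapper normalizes the address, caches results so repeated addresses are not looked up twice, and rejects coordinates outside valid latitude and longitude ranges.

```python
from typing import Callable, Dict, Optional, Tuple

Coordinates = Tuple[float, float]  # (latitude, longitude)

def make_cached_geocoder(lookup: Callable[[str], Optional[Coordinates]]):
    """Wrap a geocoding callable with normalization, caching, and validation."""
    cache: Dict[str, Optional[Coordinates]] = {}

    def geocode(address: str) -> Optional[Coordinates]:
        key = " ".join(address.upper().split())  # normalize case and spacing
        if key not in cache:
            coords = lookup(key)
            if coords is not None:
                lat, lon = coords
                if not (-90 <= lat <= 90 and -180 <= lon <= 180):
                    coords = None  # discard out-of-range coordinates
            cache[key] = coords
        return cache[key]

    return geocode

# Hypothetical stand-in for a real geocoding service.
FAKE_INDEX = {"12 MAIN ST, AUSTIN, TX": (30.2672, -97.7431)}
calls = []

def fake_lookup(address):
    calls.append(address)
    return FAKE_INDEX.get(address)

geocode = make_cached_geocoder(fake_lookup)
first = geocode("12 Main St,  Austin, TX")
second = geocode("12 main st, austin, tx")  # served from the cache
```

Passing the lookup in as a parameter keeps the sketch independent of any particular provider, and the cache matters in practice because many permits share the same property address.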

Better Data Management

To ensure data quality, the pipeline prevents the entry of duplicate data using a combination of three business features and OLAP CTID mechanisms. If the same permit data is accidentally scraped multiple times, the pipeline detects and eliminates duplicates based on unique criteria, ensuring a clean and reliable dataset.
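A simple way to illustrate duplicate prevention on a combination of three fields is a composite uniqueness constraint. Note that this sketch swaps in SQLite and a `UNIQUE` constraint for demonstration; it does not reproduce the CTID-based mechanism mentioned above, and the three key columns (`permit_number`, `address`, `issue_date`) are assumed, since the text does not name the actual business features.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE permits (
        permit_number TEXT NOT NULL,
        address       TEXT NOT NULL,
        issue_date    TEXT NOT NULL,
        description   TEXT,
        -- Assumed three-field business key; duplicates violate it.
        UNIQUE (permit_number, address, issue_date)
    )
    """
)

def insert_permits(conn, rows):
    """Insert rows, silently skipping duplicates; return how many were added."""
    before = conn.execute("SELECT COUNT(*) FROM permits").fetchone()[0]
    conn.executemany("INSERT OR IGNORE INTO permits VALUES (?, ?, ?, ?)", rows)
    conn.commit()
    after = conn.execute("SELECT COUNT(*) FROM permits").fetchone()[0]
    return after - before

batch = [
    ("BP-1001", "12 MAIN ST", "2021-06-01", "Roof replacement"),
    ("BP-1002", "40 OAK AVE", "2019-03-15", "Panel upgrade"),
]
added_first = insert_permits(conn, batch)
added_again = insert_permits(conn, batch)  # same data scraped a second time
```

Enforcing uniqueness in the database rather than in application code means the check holds no matter which scraping task writes the row.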

The pipeline regularly backs up data to the cloud, ensuring data preservation and availability. In the event of a system failure or data loss, the pipeline can retrieve the last backup to restore missing or corrupted data, maintaining data integrity.

Business Applications of the Data

Contractors

Contractors use data analysis to optimize their services. They analyze locality data, including building permits, to gauge demand in specific areas. By mapping customers to localities using geospatial technology, they can identify opportunities for tailored services and upselling. Contractors proactively target high-permit areas, increasing customer engagement and meeting active market needs. This data-driven approach guides resource allocation, marketing, and decision-making, resulting in increased efficiency and profitability. Data analysis also helps track the success of initiatives, enabling ongoing strategy refinement and operational optimization.

Real Estate

Real estate firms leverage property data to assess a property’s condition, incorporating details like age, maintenance, and renovations to determine its value and listing price. A well-maintained property may command a higher price, while one in need of repairs is priced lower, which keeps pricing accurate. Additionally, customer data helps real estate companies provide personalized service. By drawing on client preferences and transaction history, they offer tailored property recommendations, aiding informed decision-making and building trust with clients.

In the insurance industry, a well-structured ETL pipeline is needed for accurate risk assessment, underwriting, and claims processing. It enables insurance companies to make data-driven decisions, improve operational efficiency, and ensure compliance with regulatory requirements.